Introduction

For decades, people have suffered from mental stress caused by work, family, relationships, and finances. Repeated acute stress and chronic stress are well known to harm health, for example by increasing the risk of cardiovascular disease, diabetes, and mental disorders [1]. In the era of well-being, people are paying more and more attention to managing stress in everyday life. Appropriate management requires accurately recognizing one’s stress state.

IoT (Internet of Things) and artificial intelligence technologies can meet the strong demand for stress recognition. Wearables and IoT devices let us monitor and collect various sensor signals, and artificial intelligence techniques help analyze the collected data to recognize human stress states. The CoSMoS (Collaborative Sensing & Managing on Stress) project aims to study various sensor signals related to stress, to analyze and validate multiple hypotheses about stress recognition, to design artificial intelligence-based recognition models for stress states, and to deliver useful feedback to users.

stressExplorer

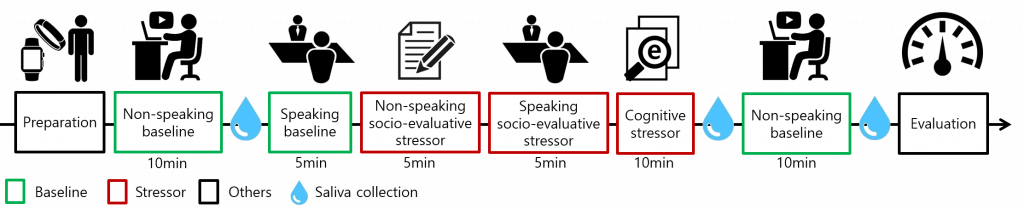

stressExplorer (i) supports experimental design and setup with various wearable and smart devices, (ii) processes and analyzes the collected data to study interesting patterns in sensor data during stressful episodes, and (iii) designs various stress recognition models while adjusting multiple parameters of both the data and the artificial intelligence methods. Based on stressExplorer, we construct a stress-inducing scenario that includes several stressors and baselines, drawing on prior work, as shown in Figure 1.

Figure 1: Stress-inducing laboratory scenario with several baselines and stressors

stressExplorer uses three wearable devices, Zephyr “BioModule” [2], LG “Watch Style” [3], and Empatica “E4” [4], and additionally monitors the subject with a VSTARCAM “100E” [5] IP camera. A subject in our experimental scenario wears the “BioModule” on the chest and the “Watch Style” and “E4” on the wrists (the “E4” on the non-dominant side), as shown in Figure 2. Through the wearables, we monitor ECG (Electrocardiography), EDA (Electrodermal Activity), BVP (Blood Volume Pulse), body temperature, respiration, posture, and accelerometer and gyroscope readings.

Figure 2: Experimental scene of a subject wearing the three wearable devices
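To illustrate the kind of processing such a scenario calls for, the sketch below cuts one sensor signal into fixed-length windows, summarizes each window, and labels it with the scenario segment (baseline or stressor) in which it starts. It is a minimal sketch and not the stressExplorer implementation: the function names, the 4 Hz sampling rate, the 10-second window, the segment timings, and the sample values are all hypothetical.

```python
from statistics import mean, pstdev


def window_features(samples, rate_hz, window_s):
    """Split a 1-D signal into non-overlapping windows and summarize each one."""
    size = int(rate_hz * window_s)  # samples per window
    features = []
    for start in range(0, len(samples) - size + 1, size):
        chunk = samples[start:start + size]
        features.append({"mean": mean(chunk), "std": pstdev(chunk)})
    return features


def label_windows(n_windows, window_s, segments):
    """Label each window with the scenario segment that contains its start time.

    segments is a list of (start_s, end_s, label) tuples; a window that starts
    outside every segment gets the label None.
    """
    labels = []
    for i in range(n_windows):
        t = i * window_s  # start time of window i, in seconds
        label = next((name for s, e, name in segments if s <= t < e), None)
        labels.append(label)
    return labels


# Hypothetical data: a 20-second EDA-like trace sampled at an assumed 4 Hz,
# flat during a baseline and elevated during a stressor.
eda = [0.5] * 40 + [0.9] * 40
feats = window_features(eda, rate_hz=4, window_s=10)
labels = label_windows(len(feats), 10, [(0, 10, "baseline"), (10, 20, "stressor")])

for feat, label in zip(feats, labels):
    print(label, feat)
```

Each labeled feature row could then serve as one training example for a recognition model; the window length and the choice of summary statistics are examples of data-side parameters one might vary.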

Our group conducted two human-subject studies approved by the Institutional Review Board (7001988-201712-HR-101-02 and 7001988-201706-HR-201-03). For each study, we recruited 100 healthy subjects in their 20s and 30s. We have been submitting papers on these experiments to several conferences and journals.

[1] http://www.who.int/features/factfiles/mental_health/mental_health_facts/en/

[2] https://www.zephyranywhere.com/

[3] http://www.lg.com/us/smart-watches/lg-W270-Titanium-style

[4] Garbarino, M., Lai, M., Bender, D., Picard, R. W., & Tognetti, S. (2014). Empatica E3 – A wearable wireless multi-sensor device for real-time computerized biofeedback and data acquisition. In 2014 EAI 4th International Conference on Wireless Mobile Communication and Healthcare (Mobihealth), pp. 39-42.

[5] http://www.vstarcam.co.kr/product.php?uid=25&model=VSTARCAM-100E

Relevant Publications

- Saewon Kye, Junhyung Moon, Juneil Lee, Inho Choi, Dongmi Cheon, Kyoungwoo Lee, “Multimodal data collection framework for mental stress monitoring”, 2017 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2017 ACM International Symposium on Wearable Computers (UbiComp '17), Sep. 2017.

Research funding

- “Emotional Intelligence Technology to Infer Human Emotion and Carry on Dialogue Accordingly (R0124-16-0002)”

- Institute for Information & Communications Technology Promotion (IITP) grant funded by the Korea government (MSIP)

- 14M USD (2016~2021)